Achieving Real Impact: Navigating the Data and Regulatory Barriers to AI Adoption in Bio/pharma

Key Takeaways

- AI enhances drug manufacturing by managing complex, multivariable processes, providing actionable insights throughout the drug lifecycle.

- High-quality data is crucial for effective AI model development, and a lack of it is the primary barrier to AI adoption in biopharma.

Toni Manzano, PhD, discusses his CPHI Europe presentation, stating that AI is essential for managing complex drug and biologics manufacturing.

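In an interview with PharmTech regarding his presentation “From Science to Scale: Crossing the Hype Chasm with Industrialized GMP AI in API and Drug Manufacturing” at CPHI Europe, Manzano discussed the role of AI in bio/pharma manufacturing and the barriers to its adoption.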

PharmTech: What role do AI and digital technologies play in accelerating drug discovery and improving manufacturing?

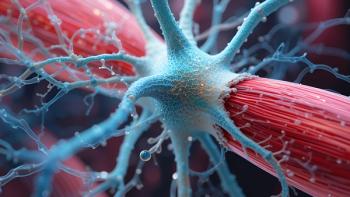

Manzano: The pharmaceutical industry works with extremely complex systems, especially biologics, which are far more complex than classical chemistry (APIs) or genetics. These complex systems are very difficult to analyze using traditional methods.

Classical statistics, which rely on controlling one variable at a time, simply do not work for analyzing biologics, because these processes are always multivariable and complex. This is precisely where AI brings significant value. AI is a mathematical tool and a scientific instrument, a discipline now taught at universities, and it is exceptionally effective at managing multivariable problems. It handles many variables simultaneously, rather than one by one, in complex processes for which data are available. Ultimately, AI provides a substantial understanding of the process and the capability to deliver actionable, knowledge-based insights throughout the drug lifecycle, from discovery to manufacturing.
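To make the one-variable-at-a-time limitation concrete, here is a toy sketch with an invented process whose output depends only on the interaction between two parameters (hypothetically, temperature and feed rate in coded units from -1 to 1). It uses ordinary least squares with an interaction term rather than a machine-learning model, but it illustrates the same principle: varying each factor alone while the other sits at its baseline shows no effect, whereas varying and fitting both jointly recovers the interaction.

```python
import numpy as np

def response(temp, feed):
    """Invented process: output depends only on the temp x feed interaction."""
    return 2.0 * temp * feed

# One-factor-at-a-time (OFAT): vary one factor while the other stays at its
# baseline (0 in coded units).
levels = np.linspace(-1.0, 1.0, 5)
ofat_temp = response(levels, 0.0)  # all zeros: temperature appears to do nothing
ofat_feed = response(0.0, levels)  # all zeros: feed rate appears to do nothing

# Joint (multivariable) approach: vary both factors together, then fit
# y = b0 + b1*temp + b2*feed + b3*temp*feed by least squares.
rng = np.random.default_rng(seed=0)
n = 200
temp = rng.uniform(-1.0, 1.0, n)
feed = rng.uniform(-1.0, 1.0, n)
y = response(temp, feed) + rng.normal(0.0, 0.05, n)  # small measurement noise
X = np.column_stack([np.ones(n), temp, feed, temp * feed])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

print("OFAT temperature effect:", ofat_temp)
print("OFAT feed-rate effect:  ", ofat_feed)
print("Fitted [b0, b1, b2, b3]:", np.round(coef, 2))  # b3 should be close to 2.0
```

The OFAT runs see no change at all, so neither factor looks important, while the joint fit attributes the effect to the interaction coefficient b3.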

What is the primary barrier preventing the full adoption of AI in bio/pharma?

The main barrier today is data. AI is only as effective as the data it works with; data is everything for AI. If a company does not have good data, it cannot build good AI models. The first necessity, therefore, is digital systems capable of collecting and generating the data required to build those models.
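As a minimal sketch of one basic data-readiness check, the hypothetical `completeness` function below measures what fraction of the expected sensor samples a digital system actually captured over a time window. It assumes a fixed sampling period with timestamps aligned to that grid; a real data-quality assessment covers far more than gaps.

```python
from datetime import datetime, timedelta

def completeness(timestamps, start, end, period=timedelta(seconds=1)):
    """Fraction of expected samples present in [start, end).

    Assumes samples should arrive exactly once per `period`, aligned to `start`.
    Duplicate timestamps are counted once.
    """
    expected = int((end - start) / period)
    if expected <= 0:
        return 0.0
    present = {ts for ts in timestamps if start <= ts < end}
    return len(present) / expected

# Example: one hour of 1-second pH readings where every tenth sample was lost.
start = datetime(2025, 1, 1, 8, 0, 0)
end = start + timedelta(hours=1)
captured = [start + timedelta(seconds=s) for s in range(3600) if s % 10 != 0]
print(f"Completeness: {completeness(captured, start, end):.1%}")  # Completeness: 90.0%
```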

What’s the key to successful AI scale-up within GxP constraints?

We have very positive news in the pharmaceutical sector regarding regulation. For the first time in history, regulators (FDA and EMA) have provided guidelines and good practices for applying AI in GMP before the industry has fully adopted it. This is significant because the regulatory framework is already prepared.

For CMOs and CDMOs, this means there is no regulatory blocker. The GxP framework, including all the necessary guidelines and good practices for using AI, is ready. The primary operational obstacle remains the need for good data as a foundation, as I mentioned previously.

Why do one-size-fits-all AI models fail in bio/pharma manufacturing?

The reality is that a single AI model cannot cover the full drug manufacturing process. Once you model your physical or biotech process, you will find that you need multiple, specific models to replicate and predict different variables. For example, one model might predict pH, another might predict density, and yet another might be needed to detect anomalies or data drift.
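A model that detects data drift, one of the examples above, can be as simple as comparing a recent window of a sensor signal with a validated reference period. The hypothetical `detect_drift` function below flags drift when the recent mean moves more than `threshold` standard errors away from the reference mean. The pH values and the threshold of 3 are invented for illustration, and production monitors usually rely on more robust tests such as CUSUM or Kolmogorov-Smirnov.

```python
from statistics import mean, stdev

def detect_drift(reference, recent, threshold=3.0):
    """Return True when the mean of `recent` differs from the mean of `reference`
    by more than `threshold` standard errors (a simple z-test on the mean)."""
    if len(reference) < 2 or not recent:
        raise ValueError("need >= 2 reference values and >= 1 recent value")
    ref_mean = mean(reference)
    ref_sd = stdev(reference)
    if ref_sd == 0:
        # Constant baseline: any change in the recent mean counts as drift.
        return mean(recent) != ref_mean
    standard_error = ref_sd / len(recent) ** 0.5
    return abs(mean(recent) - ref_mean) / standard_error > threshold

reference_ph = [7.00, 7.02, 6.98, 7.01, 6.99, 7.00, 7.03, 6.97]  # validated baseline
stable_ph = [7.01, 6.99, 7.00, 7.02]
drifted_ph = [7.10, 7.12, 7.11, 7.13]
print(detect_drift(reference_ph, stable_ph))   # False
print(detect_drift(reference_ph, drifted_ph))  # True
```

Keeping the monitor separate from the pH and density predictors mirrors the point above: each concern gets its own specific model.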

Companies will need at least one specific model per variable. Crucially, each model represents a specific combination of product and equipment. The complexity arises because managing drug manufacturing accurately requires more than one AI model per product and process. With at least two models per product, a company producing 20 to 50 products would need 40 to 100 models or more.

The core problem is how to industrialize this complexity. Companies like Aizon aim to solve this by providing platforms specifically designed to industrialize AI, thereby managing the vast number of specific models needed.
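One way to picture this bookkeeping is a registry keyed by product, equipment, and predicted variable. The sketch below is hypothetical and does not describe Aizon's platform or any product API; it assumes each product runs on a single piece of equipment and needs two models (pH and density), which is the interview's "at least two models per product" lower bound.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelKey:
    """One model slot: a product made on specific equipment, predicting one variable."""
    product: str
    equipment: str
    target: str  # e.g., "pH" or "density"

def build_registry(assignments, targets):
    """Create an empty slot per (product, equipment, target).

    `assignments` maps each product to the equipment it runs on (hypothetical:
    real products may run on several lines, multiplying the count further).
    """
    return {ModelKey(p, e, t): None for p, e in assignments.items() for t in targets}

targets = ["pH", "density"]  # two models per product
for n_products in (20, 50):
    assignments = {f"product-{i:02d}": f"line-{i % 5}" for i in range(n_products)}
    registry = build_registry(assignments, targets)
    print(n_products, "products ->", len(registry), "models")
# 20 products -> 40 models
# 50 products -> 100 models
```

Because the key includes the equipment, moving a product to a new line creates new slots rather than silently reusing a model trained on different hardware.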

How can AI systems remain compliant as they evolve or retrain?

Guidance is emerging quickly; in Europe, we have the draft Annex 22 for AI in drug manufacturing, and the FDA published very useful guidelines in January 2025 regarding the use of AI for critical decisions in drug manufacturing.

The positive development is that US and European regulators have reached a shared commitment centered on risk assessment. The first mandatory step is a risk assessment that examines how the AI model affects the patient through the quality, safety, and efficacy of the drugs being produced.

Companies must then act according to the risk level that this assessment determines. For instance, if an AI model takes part in process control to keep a process in a state of control, the risk is considered high because the AI assumes a primary controlling role. Conversely, if the AI model only recommends a decision to the end user, the risk is considered low.
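The two cases described above can be sketched as a small classification rule. This is a hypothetical simplification for illustration, not the classification defined in draft Annex 22 or FDA guidance; the field names, the `assess_risk` function, and the assumption that a controlling model with no patient impact counts as low risk are all invented here.

```python
from dataclasses import dataclass
from enum import Enum

class Risk(Enum):
    LOW = "low"
    HIGH = "high"

@dataclass(frozen=True)
class AIUseCase:
    name: str
    affects_patient: bool    # can its output change product quality, safety, or efficacy?
    controls_process: bool   # True: acts on process control; False: only recommends to a person

def assess_risk(use_case: AIUseCase) -> Risk:
    """Hypothetical two-level rule distilled from the interview, not the
    classification defined in Annex 22 or FDA guidance."""
    if use_case.affects_patient and use_case.controls_process:
        return Risk.HIGH
    return Risk.LOW

advisor = AIUseCase("pH adjustment advisor", affects_patient=True, controls_process=False)
controller = AIUseCase("closed-loop feed controller", affects_patient=True, controls_process=True)
print(advisor.name, "->", assess_risk(advisor).value)        # pH adjustment advisor -> low
print(controller.name, "->", assess_risk(controller).value)  # closed-loop feed controller -> high
```

A real assessment would grade patient impact rather than treat it as a yes/no flag, and the resulting level would drive how much validation and oversight retraining requires.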

What role do cloud-based platforms play in AI adoption?

Real-world drug manufacturing operations generate vast amounts of multivariate data, including time series (such as density and pH) that sensors report every second, and various events and records (such as batch line clearance, critical quality attributes [CQAs], and critical process parameters [CPPs]). We are dealing with gigabytes of information per day that must be managed before AI systems can consume it.

If AI is used as a strategic control mechanism, this massive volume of information must be sent and processed in real time or near real time. The system must then feed the AI's output back to operations so that they can adjust, configure, or correct the process as events happen.

This demanding workload, which requires substantial computing power, virtually unlimited data storage, and real-time or near-real-time connectivity, cannot be managed effectively by classical or on-premise systems. The cloud is the only system that allows this level of industrial capability.
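A back-of-envelope calculation shows how per-second sensor sampling reaches gigabytes per day. The sensor count (2,000 tags at 1 Hz) and the 16-byte sample size (an 8-byte timestamp plus an 8-byte value) are assumptions for illustration, not figures from the interview, and the estimate ignores events, metadata, and replication.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def daily_volume_bytes(n_sensors, samples_per_second=1.0, bytes_per_sample=16):
    """Raw bytes per day for a sensor fleet.

    Assumes each sample is an 8-byte timestamp plus an 8-byte float value and
    ignores metadata, events, compression, and replication.
    """
    return n_sensors * samples_per_second * SECONDS_PER_DAY * bytes_per_sample

volume = daily_volume_bytes(2000)  # hypothetical site with 2,000 sensor tags at 1 Hz
print(f"{volume / 1e9:.2f} GB per day")  # 2.76 GB per day
```

Raising the sampling rate or adding batch records and audit trails scales this figure linearly, which is the storage and throughput pressure described above.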

How can organizations bridge the widening skills gap created by rapid digitalization?

Among the good practices emerging in drug manufacturing, the first and most critical is to take care of the data. Data is the foundation upon which crucial decisions are made. Site managers make decisions about batch release, and quality departments decide how to resolve deviations, all based on data.

This necessitates a specific role within the organization, such as a chief data officer (or an expanded role within classical IT departments), dedicated to ensuring data quality. This role must ensure that data is consistent and always compliant with data integrity principles. Data integrity means that data must meet at least five attributes: attributable, legible, contemporaneous, original, and accurate (ALCOA). Implementing rules and good practices in labs to ensure these principles are consistently applied to all data is essential.
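The five ALCOA attributes can be turned into simple, automatable checks on each record. The sketch below is hypothetical: the `Record` fields, the five-minute contemporaneity window, and the proxies used (a parseable value for "legible," a verification flag for "accurate") are assumptions chosen for illustration, not regulatory definitions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Record:
    value: str            # value as captured, e.g. "7.02"
    recorded_by: str      # user or system ID
    observed_at: datetime
    recorded_at: datetime
    is_original: bool     # False for transcribed copies
    verified: bool        # e.g., second-person or instrument verification

def alcoa_findings(rec: Record, max_delay: timedelta = timedelta(minutes=5)) -> list[str]:
    """Return one finding per ALCOA attribute the record appears to violate."""
    findings = []
    if not rec.recorded_by.strip():
        findings.append("Attributable: no user or system ID")
    try:
        float(rec.value)  # proxy for legibility: machine-readable number
    except ValueError:
        findings.append("Legible: value is not a readable number")
    if not (timedelta(0) <= rec.recorded_at - rec.observed_at <= max_delay):
        findings.append("Contemporaneous: not recorded at the time of observation")
    if not rec.is_original:
        findings.append("Original: record is a copy, not the first capture")
    if not rec.verified:  # proxy for accuracy
        findings.append("Accurate: value has not been verified")
    return findings

t0 = datetime(2025, 1, 1, 8, 0)
on_time = Record("7.02", "operator-17", t0, t0 + timedelta(minutes=1), is_original=True, verified=True)
late_copy = Record("7.02", "", t0, t0 + timedelta(hours=2), is_original=False, verified=True)
print(alcoa_findings(on_time))  # []
print(alcoa_findings(late_copy))
```

Running checks like these at capture time, rather than during batch review, is one way to make the principles "consistently applied to all data."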
